Have you noticed that, inside every new Raptor-Dev release announcement, there’s a link to download the Raptor-Test regression reports? In that download, we list every individual regression test run on the software and whether or not it passed. But what exactly is regression testing, and why is it necessary? Before you break out your old stats notebook to find out, know that regression tests have nothing to do with variable correlation; you’re thinking of regression analysis.

What is Regression Testing?

Throughout the software development life cycle, engineers create a suite of test cases and validate the software against them before developing additional features. However, software development isn’t always linear. As developers write code, functions are added to enhance functionality or to respond to requirement changes. Revisions are also often necessary to fix bugs or performance issues. As software continuously evolves and expands, it’s necessary to retest and confirm that new code doesn’t alter the existing functionality of the system. To do so, engineers frequently rerun the previous test cases to catch modifications that have adverse effects on existing code. This is referred to as regression testing.

Think of the word regression in terms of its meaning “the act of going back to a previous place or state.” Regression testing goes back over the software, making sure new functions don’t break existing performance.

The Universal Regression Rig

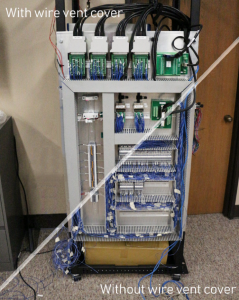

Over the years at New Eagle, we have focused on improving our regression testing method. Through this work, we built a Universal Regression Rig (U.R.R.) to test software packages generated in Raptor-Dev on our Raptor-based ECUs. When first developing this system, we concentrated on:

- Replacing our existing test boards with a more robust and repeatable system

- Upgrading our testing procedures using a selection of advanced hardware

- Organizing and consolidating our testing hardware

- Streamlining our testing process to save time and improve the quality of our software systems

Built with aerospace-grade testing hardware, this rig is capable of complete I/O testing on up to eight Raptor ECUs. It also handles most (soon to be all) of the hardware circuit types supported by the various Raptor ECUs. The U.R.R. runs tests automatically and is designed so that, when something doesn’t pass, our engineers can address the software rather than manually debug the rig itself.

Why Regression Testing is Necessary

Now that you know what regression testing is and how it’s done, let’s talk about why it’s necessary. Regression testing is a part of all software development and maintenance. If a team fails to validate the functionality of the source code prior to release, errors can slip through, and those errors can harm the people using the system.

However, regression testing does have disadvantages. It can become incredibly extensive depending on the complexity of the software. Why? Test cases are continuously added as software progresses. Between developing and running a hefty suite of test cases, regression testing becomes time-consuming, expensive, and demanding of an advanced set of resources. In fact, much of a project’s budget and resource allocation is often set aside for regression testing.

Regression Testing Techniques

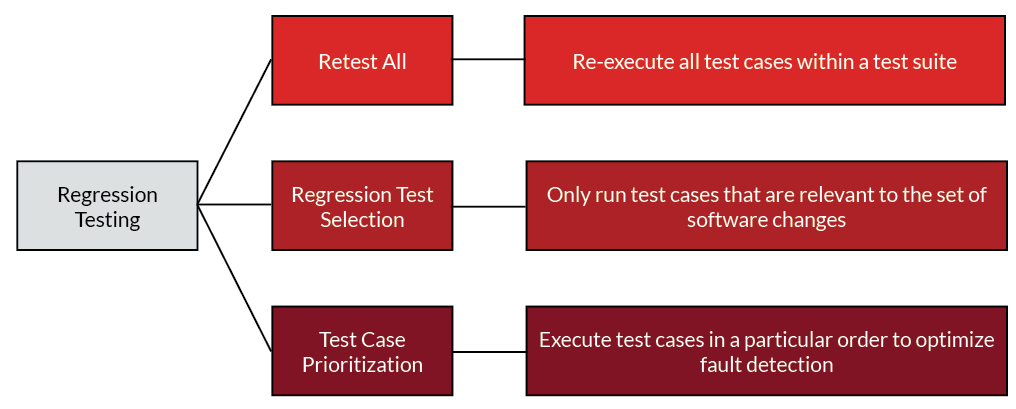

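To offset these issues, several techniques can reduce the time and cost associated with regression testing. The three main ones are Retest All, Regression Test Selection, and Test Case Prioritization. Retest All reruns the entire suite, Regression Test Selection reruns only the tests affected by a change, and Test Case Prioritization runs the most important tests first.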
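To make the difference between Retest All and Regression Test Selection concrete, here is a minimal Python sketch. It assumes you keep a mapping from each test to the source files it exercises; the test names, file names, and the `TEST_COVERAGE` table are hypothetical and are not taken from Raptor-Test.

```python
from typing import Dict, List, Set

# Hypothetical mapping from each test case to the source files it exercises.
TEST_COVERAGE: Dict[str, Set[str]] = {
    "test_can_rx": {"can_driver.c", "can_queue.c"},
    "test_pwm_output": {"pwm.c"},
    "test_fault_manager": {"faults.c", "can_queue.c"},
}


def retest_all(coverage: Dict[str, Set[str]]) -> List[str]:
    """Retest All: run every test, regardless of what changed."""
    return sorted(coverage)


def select_tests(coverage: Dict[str, Set[str]], changed_files: Set[str]) -> List[str]:
    """Regression Test Selection: run only tests that touch a changed file."""
    return sorted(
        name for name, files in coverage.items() if files & changed_files
    )
```

A change to `can_queue.c` would select only the two tests that depend on it, while `retest_all` always returns the full suite. Selection saves time, but it is only as trustworthy as the coverage mapping behind it.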

Going back to our improvements on regression testing, we first test critical systems, followed by functional systems, and, finally, non-functional systems. This order lets us monitor the effects of software modifications. If a program requires it, we can also execute performance testing, which tracks the quality of the output by exercising the system and monitoring its response. As a whole, this process confirms that the system performs to customer expectations and measures the outcome with regard to user experience. For instance, in our drive-by-wire (DBW) system, we run tests to validate that the system sends the command to turn the steering wheel. The wheel should both turn the proper amount, indicating proper functionality, and turn smoothly, creating an enjoyable driving experience for passengers.
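The ordering described above (critical, then functional, then non-functional) is a simple form of test case prioritization. The sketch below shows one way to express it in Python; the `RegressionCase` type, the tier labels, and the test names are illustrative assumptions, not part of any New Eagle tool.

```python
from dataclasses import dataclass
from typing import List

# Lower number runs first; tier names follow the order described above.
TIER_ORDER = {"critical": 0, "functional": 1, "non-functional": 2}


@dataclass(frozen=True)
class RegressionCase:
    name: str  # hypothetical test name
    tier: str  # one of the keys in TIER_ORDER


def prioritize(tests: List[RegressionCase]) -> List[RegressionCase]:
    """Order tests critical -> functional -> non-functional.

    sorted() is stable, so tests within a tier keep their original order.
    """
    return sorted(tests, key=lambda t: TIER_ORDER[t.tier])
```

Running the critical tier first means a failure in something like a steering command surfaces before any time is spent on lower-priority checks.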

Testing Raptor Releases

When applying regression tests to Raptor, we run them against each internal build of Raptor-Dev with our Continuous Integration (CI) server. Our engineers integrate source code into our shared repository. From there, the CI server monitors our source code and, after each check-in, automatically builds and tests the product to identify any potential errors. If an error occurs, the server alerts our team to the regression so they can detect and correct problems early on. With this automation, we receive the added benefit of accelerated software releases.
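At its core, the check a CI server performs after each build can be thought of as a comparison against the last known-good results: any test that used to pass and no longer does is a regression. The following Python sketch illustrates that idea only; the function names, the result format, and the alert text are assumptions and do not describe our actual CI configuration.

```python
from typing import Dict, List


def find_regressions(baseline: Dict[str, bool], current: Dict[str, bool]) -> List[str]:
    """Return tests that passed in the baseline but fail (or are missing) now."""
    return sorted(
        name
        for name, passed in baseline.items()
        if passed and not current.get(name, False)
    )


def format_alert(build_id: str, regressions: List[str]) -> str:
    """Build the message a CI job might send to the team (hypothetical format)."""
    if not regressions:
        return f"Build {build_id}: no regressions"
    return (
        f"Build {build_id}: {len(regressions)} regression(s): "
        + ", ".join(regressions)
    )
```

Note that a test that was already failing in the baseline is not reported as a regression. That keeps alerts focused on what the latest check-in broke.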

The Three Stages of Regression Testing

We continue testing on every version of our Raptor Software Tools by pushing them through the three stages of regression testing. These three stages are software-only, processor-in-the-loop, and hardware tests.

Software-only tests ensure that each model previously built will continue to build. Every potential software release is tested across every MATLAB version currently supported by Raptor-Dev. These tests determine whether the software is functioning correctly and identify any platform-specific issues that arise. Our engineers use software-only testing to confirm that the new Raptor-Dev release will function for all customers across all supported platforms.
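Testing every model against every supported MATLAB version amounts to running a build matrix. Here is a rough Python sketch of that structure. The `build` callable stands in for whatever actually invokes the build, and the model and version names used with it are placeholders, not a list of what Raptor-Dev supports.

```python
from itertools import product
from typing import Callable, Dict, Iterable, List, Tuple

BuildResults = Dict[Tuple[str, str], bool]


def run_build_matrix(
    models: Iterable[str],
    versions: Iterable[str],
    build: Callable[[str, str], bool],
) -> BuildResults:
    """Build every model under every version and record pass/fail."""
    return {(m, v): build(m, v) for m, v in product(models, versions)}


def failures_by_version(results: BuildResults) -> Dict[str, List[str]]:
    """Group failing models by version to expose platform-specific issues."""
    failed: Dict[str, List[str]] = {}
    for (model, version), ok in results.items():
        if not ok:
            failed.setdefault(version, []).append(model)
    return failed
```

Grouping failures by version makes it easy to see when a model builds everywhere except on one platform, which is exactly the kind of platform-specific issue this stage is meant to catch.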

Processor-in-the-loop testing ensures that each software version runs properly on the intended hardware. Raptor-Test scripts run against the hardware integrated with the new software release to validate whether all major functions work properly. This stage tests major features, such as interpolation tables, the fault manager, and the J1939 protocol library.
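As an illustration of what checking a feature like an interpolation table can involve, the Python sketch below compares a value reported by the target against a reference 1-D linear interpolation computed on the PC. It assumes the table clamps at its end points and uses a made-up tolerance; the behavior of Raptor’s own interpolation blocks is not specified here.

```python
from bisect import bisect_right
from typing import Sequence


def interp_1d(x: float, xs: Sequence[float], ys: Sequence[float]) -> float:
    """Reference linear interpolation; clamps outside the table (assumption)."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    i = bisect_right(xs, x) - 1  # index of the breakpoint at or below x
    frac = (x - xs[i]) / (xs[i + 1] - xs[i])
    return ys[i] + frac * (ys[i + 1] - ys[i])


def check_table(reported: float, x: float, xs: Sequence[float],
                ys: Sequence[float], tol: float = 1e-3) -> bool:
    """True if the value reported by the target matches the reference."""
    return abs(reported - interp_1d(x, xs, ys)) <= tol
```

A test script would sweep `x` across the breakpoints, the midpoints, and values beyond both ends of the table, since off-by-one errors tend to hide at the edges.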

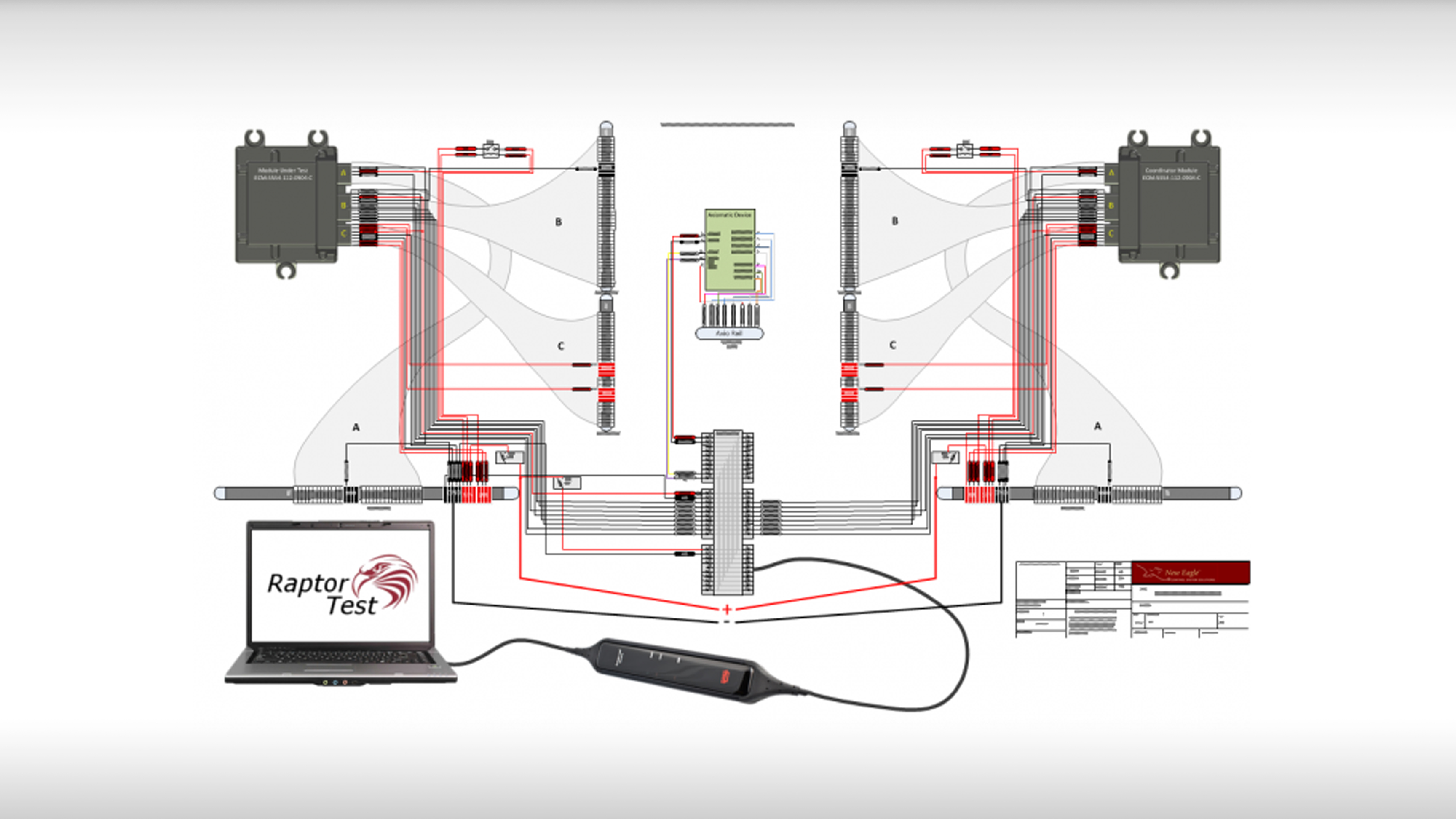

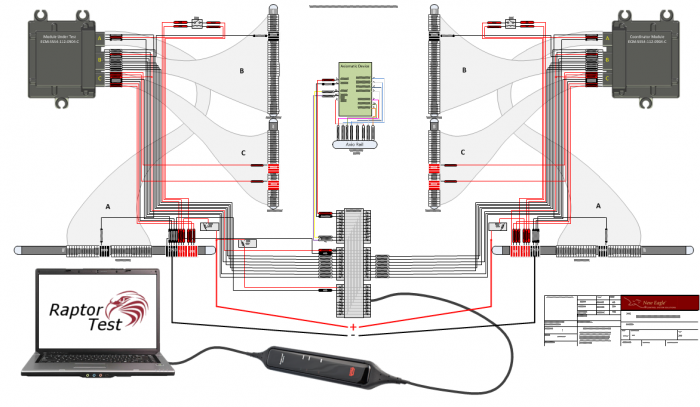

Hardware tests, performed on our U.R.R., establish whether the hardware inputs and outputs (I/O) function through all possible scenarios according to customer specifications with the new build of Raptor-Dev. Once a software package is flashed onto an ECU, the test module interfaces with a coordinator module. Then, test scripts run to transmit and receive sensor and actuation signals between the two modules. Sensor signals, such as analog inputs, are sent to the target hardware and verified there. Lastly, the coordinator hardware receives the test module’s outputs to confirm that actuation signals, such as PWM and digital outputs, function properly.
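Conceptually, each hardware check compares a commanded signal with what the other module measures and flags anything outside tolerance. The Python sketch below shows that comparison in isolation. The signal names, tolerances, and the `measure` callback are hypothetical stand-ins for the real rig interface, which is not described here.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class SignalCheck:
    name: str         # hypothetical signal name, e.g. an analog input channel
    commanded: float  # value driven by the sending module
    tolerance: float  # allowed absolute error at the receiving module


def verify_signals(checks: List[SignalCheck],
                   measure: Callable[[str], float]) -> List[str]:
    """Return the names of signals whose measured value is out of tolerance."""
    return [
        c.name
        for c in checks
        if abs(measure(c.name) - c.commanded) > c.tolerance
    ]
```

An empty list means every signal passed. In practice, `measure` would read from the coordinator or test module rather than from a lookup table.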

Building Validation with Raptor-Test

Raptor-Test is a powerful software tool that, in conjunction with a test bench setup, facilitates testing of model-based software against a system’s requirements through simulated hardware-in-the-loop (SimHIL). SimHIL testing is a control systems validation strategy. It uses simulated I/O to verify that the software functions match the application requirements. By using a graphical PC interface, users quickly create and execute a test script over a USB-to-CAN interface, automating a once error-prone, manual testing process.

Raptor-Test configuration can vary with the application’s testing requirements. Some applications only require a PC to run Raptor-Test, a Kvaser cable for CAN-to-USB connection, and the ECU under test. For more complex applications, Raptor-Test can interface with both a Test Module and a Coordinator Module (which typically runs a plant model), transmitting and receiving simulated sensor and actuation signals between the two modules.

A validation test plan built with Raptor-Test uses automation to test your software changes reliably and repeatedly. Ultimately, this gives you greater confidence in your software, putting you on the path to production.

Develop Your Solution with the Intended Results

Software modifications occur throughout the development cycle, and regression testing is often tedious and overwhelming. However, it’s vital for ensuring your end product functions as intended. If you’re looking to develop a program that requires efficient and affordable regression testing prior to your solution’s release, contact New Eagle.